Retrieval Augmented Generation (RAG) is becoming popular because many use cases require LLMs to access private information and to search over unstructured data such as PDFs, PPTs, private websites, and other documents. As the name suggests, retrieval is the first step, i.e. finding the relevant information. Among the available techniques, vector search has established itself as the go-to method for building a retriever for LLMs. In this post, we will discuss Google’s semantic search within Vertex AI Agent Builder (Vertex AI Search) and how it compares with vector search.

- What are Vector Stores?

- Creating and querying a Vector Store in BigQuery

- What is Vertex AI Agent Builder?

- Creating a search agent

- Comparison

- Summary / TLDR

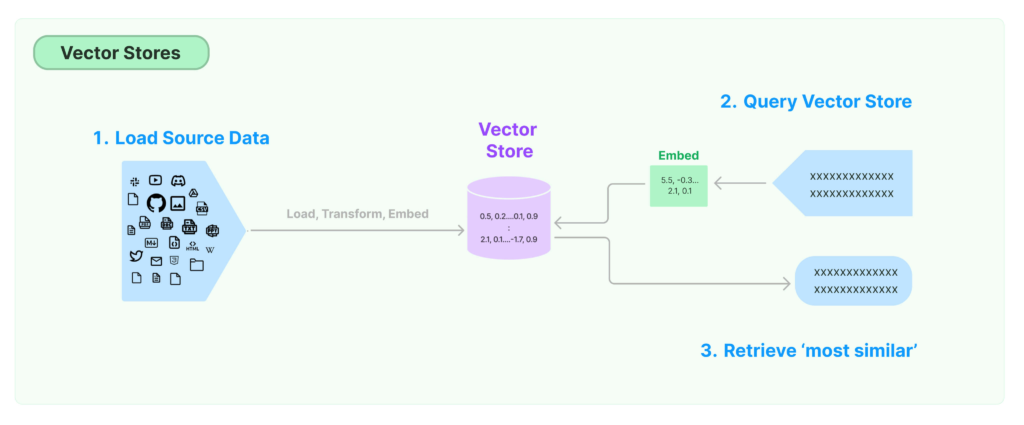

1. What are Vector Stores?

A vector store is usually an end-to-end solution to store, embed, and quickly search for similar vectors. For instance, in a document retrieval system, a vector store can be used to quickly find documents whose content is similar to a user query. With the growing popularity of RAG and LLMs, many databases now support vector operations, either natively or as an added feature:

- Native vector databases: Chroma DB, Pinecone, etc.

- Databases with vector support: BigQuery, Databricks, Snowflake, etc.
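To make the store / embed / search idea concrete, here is a minimal in-memory sketch. It is not how Chroma DB, Pinecone, or BigQuery work internally: the "embedding" is a toy bag-of-words count standing in for a real embedding model (an assumption for illustration), and the search is a brute-force cosine-similarity scan rather than an approximate nearest-neighbour index. `ToyVectorStore`, `embed`, and `cosine_similarity` are hypothetical names.

```python
from __future__ import annotations

import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding" (assumption): a bag-of-words term-count vector.
    # A real vector store would call an embedding model here instead.
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    # Cosine of the angle between two sparse vectors; 1.0 means same direction.
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Hypothetical in-memory store: keeps each text with its vector and metadata."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, Counter, dict]] = []

    def add(self, texts: list[str], metadatas: list[dict] | None = None) -> None:
        metadatas = metadatas or [{} for _ in texts]
        for text, meta in zip(texts, metadatas):
            # Embed once at write time, so a query only needs to embed itself
            self.rows.append((text, embed(text), meta))

    def search(self, query: str, k: int = 4) -> list[tuple[str, float]]:
        # Brute-force scan: score every stored row, return the k most similar
        q = embed(query)
        scored = [(text, cosine_similarity(q, vec)) for text, vec, _ in self.rows]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = ToyVectorStore()
store.add([
    "the xia dynasty ruled early china",
    "rome was founded on seven hills",
    "the han dynasty followed the qin",
])
results = store.search("xia dynasty", k=2)
print(results)
```

The chunk mentioning both "xia" and "dynasty" ranks first, the one sharing only "dynasty" ranks second, and the unrelated chunk is cut off by `k=2`. Production stores swap in learned embeddings so that semantically related text scores highly even without shared words.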

2. Creating a Vector Store in BigQuery

Since I wanted to compare vector search with Google’s semantic search, it makes sense to create the vector store in BigQuery, which also runs on Google Cloud. We’ll use LangChain for this. I’ve written a simple PDF loader and document splitter in LangChain, and we will use the resulting text and metadata to populate the vector store.

Creating an empty store

LangChain modules make it easy to create a vector store in BigQuery. The store is backed by a table containing the document ID, text content, embedding values, metadata, etc., which can be queried as a standard table or accessed via the store’s methods.
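Because the store is an ordinary BigQuery table, you can inspect it with plain SQL. The sketch below only builds a preview query string; `preview_store_sql` is a hypothetical helper, and it uses `SELECT *` so as not to assume the column names LangChain chooses for the table.

```python
def preview_store_sql(project_id: str, dataset: str, table: str, limit: int = 5) -> str:
    """Hypothetical helper: build a standard SQL query that previews the store's table."""
    for part in (project_id, dataset, table):
        # Reject empty parts and backticks, which would break the quoted table path
        if not part or "`" in part:
            raise ValueError(f"invalid identifier part: {part!r}")
    if limit <= 0:
        raise ValueError("limit must be a positive integer")
    return f"SELECT * FROM `{project_id}.{dataset}.{table}` LIMIT {int(limit)}"


sql = preview_store_sql("your-project-id", "your_dataset", "your_table", limit=3)
print(sql)
```

The resulting string can then be run with the `google-cloud-bigquery` client, for example `bigquery.Client(project=PROJECT_ID).query(sql).result()`, just like any other query.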

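```python
from langchain_google_community import BigQueryVectorStore
from langchain_google_vertexai import VertexAIEmbeddings

# Placeholders: replace with your own project, dataset, table and region
PROJECT_ID = "your-project-id"
DATASET = "your_dataset"
TABLE = "your_table"
REGION = "US"

# Embedding model used to vectorise both the documents and the queries
embedding = VertexAIEmbeddings(
    model_name="textembedding-gecko@latest", project=PROJECT_ID
)

# Vector store backed by a table in the given BigQuery dataset
store = BigQueryVectorStore(
    project_id=PROJECT_ID,
    dataset_name=DATASET,
    table_name=TABLE,
    location=REGION,
    embedding=embedding,
)
```

Chunking documents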

Here, we build a very simple pipeline that loads the PDFs, splits them into chunks, and keeps each chunk’s metadata so that everything can be loaded into the vector store created above.
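To see what `chunk_size` and `chunk_overlap` control, here is a minimal fixed-window character splitter. This is a simplified stand-in, not LangChain’s `RecursiveCharacterTextSplitter`, which first tries to split on paragraph, line, and word boundaries before falling back to raw characters; `split_fixed` is a hypothetical name.

```python
from __future__ import annotations


def split_fixed(text: str, chunk_size: int = 1000, chunk_overlap: int = 20) -> list[str]:
    """Split text into windows of chunk_size characters; neighbours share chunk_overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(split_fixed("abcdefghij", chunk_size=4, chunk_overlap=1))
```

With `chunk_size=4` and `chunk_overlap=1` the windows are `abcd`, `defg`, `ghij`: each chunk repeats the last character of the previous one, so a sentence cut at a boundary still appears intact in at least one chunk when the overlap is large enough.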

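```python
import os

from langchain_community.document_loaders import PyPDFLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter

pdf_folder_path = "path/to/pdfs"  # placeholder: folder containing the PDFs

# Load every PDF in the folder; PyPDFLoader returns one Document per page
documents = []
for file in os.listdir(pdf_folder_path):
    if file.endswith(".pdf"):
        loader = PyPDFLoader(os.path.join(pdf_folder_path, file))
        documents.extend(loader.load())

# Split pages into chunks of up to 1000 characters with a small overlap
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=20,
    length_function=len,
    is_separator_regex=False,
)
doc_splits = text_splitter.split_documents(documents)

# Separate each chunk's text from its metadata (e.g. source file, page)
text = [doc.page_content for doc in doc_splits]
metadata = [doc.metadata for doc in doc_splits]
```

Loading and querying the store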

There are many methods that allow you to load, delete, and query the store. More details on the available methods can be found here.

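```python
# Embed the chunks and write them, with their metadata, to the BigQuery table
store.add_texts(text, metadatas=metadata)

# Retrieve the 5 chunks most similar to the query, together with their scores
query = "Tell me something about the Xia dynasty"
docs = store.similarity_search_with_score(query, k=5)
print(docs)
```

3. What is Vertex AI Agent Builder?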

Vertex AI Agent Builder is a low-code/no-code solution for creating enterprise-grade generative AI applications using Google’s foundation models, search technologies, and grounding mechanisms. It has two main features:

- Agents: Using Vertex AI Agents, you can provide new and engaging ways for users to interact with your product.

- Search: Vertex AI Search is a fully-managed platform, powered by large language models, that lets you build AI-enabled search and recommendation experiences for your public or private websites or mobile applications.

With the Search feature, we get a high-quality search experience without having to implement and maintain our own keyword-search or pattern-matching systems. According to Google, it reuses some of the same algorithms as Google Search and builds on Google’s decades of experience in semantic search technologies.

4. Creating a search agent

It’s quite easy to build a search agent with Vertex AI Agent Builder. Here I’ll use the ClickOps (console) approach to show how easily non-technical users can create their own RAG applications. There is SDK support as well, but I won’t cover the code in this article.

Creating a Data Store

Navigate to Agent Builder > Data Store

Google supports many data sources, such as websites, APIs, Google Drive, Cloud Storage (GCS), Gmail, and so on.

For this article, we will use PDF files in GCS and proceed with default parameters.

Limitation: at the time of writing, only the following locations are supported: US, EU, and global.

Creating a Search Agent

Navigate to Agent Builder > Apps > Search

For this article, we will keep the default parameters. In the data section, select the data store we created in the previous step.

NOTE: The agent is created right away, but it behaves erratically for the first few minutes, so wait 10-15 minutes before testing it.

The Preview tab lets you run search queries. We could tune the configuration to improve performance, but for a fair comparison with the untuned vector search, I’ll keep the default parameters.

Integrations

The out-of-the-box solution has a great preview, as shown above. It’s also easy to connect to the agent via an API or to embed the search widget in websites, apps, etc.

5. Comparison

The data I’ve loaded into both systems is the History From Wikipedia dataset, which is available on Kaggle.

Query 1: Tell me something about the Xia dynasty

Results from the vector store (no LLM summarisation)

Results from the Search Agent (LLM summarisation enabled)

Query 2: What happened in 500BC?

Results from the vector store (no LLM summarisation)

Results from the Search Agent (LLM summarisation enabled)

As you can see, with default parameters the Search Agent’s results are much more relevant than those of the untuned vector search. This doesn’t mean the vector store is bad; Google’s semantic search simply delivers better results with little to no tuning. With custom chunking, embeddings, and other tuning, the vector store would probably perform better. In the end, it’s a balance between performance, effort, and cost.

6. Summary / TLDR

In this article, we discussed how to create a simple vector store using LangChain and how to create a search agent in Vertex AI Agent Builder. Both approaches have their pros and cons, as outlined below:

| | Vector Search | Vertex AI Semantic Search |
|---|---|---|
| Code requirement | Medium – High | Low – High |
| Tuning options | High | Low |
| Learning curve | Medium – High | Low |
| Performance out of the box | Medium | Medium – High |
| Peak performance | Very High | High |
| Documentation (LangChain) | Medium – High | Low – Medium |
| Effort | Medium – High | Low – Medium |
| Integration with apps | High (custom) | High (out of the box) |
| Customisation | High | Low |

Which method to use for your next RAG application depends on your use case. If you need to demonstrate a proof of concept (POC) quickly, I’d recommend the Search Agent. Similarly, if you need to deploy a low-code solution and standard outputs are acceptable, the Search Agent is also a good choice. However, if your project has strict requirements and needs the flexibility to choose specific LLMs, embeddings, etc., a custom-built application on a vector store such as BigQuery would be more suitable.

In conclusion, while both methods have their strengths, your decision should consider the specific requirements of your project, balancing factors like ease of use, customisability, and performance.